GLOBAL BEST PRACTICES FOR AZURE DATA FACTORY IMPLEMENTATION DUBAI – AUTO CHECKER SCRIPT V0.1

Building on the work detailed in my previous blog post (Best Practices for Implementing Azure Data Factory Dubai), I was tasked by my delightful boss with turning this content into a simple checklist of what/why that others could use… I did so, slightly reluctantly. However, I wanted to do something better than simply transcribe the previous blog post into a checklist, so I decided to break out the Shell of Power and attempt to automate said checklist. Sure, a checklist could be picked up and used by anyone, with answers manually provided by the person inspecting a given ADF resource. But what if the results could be handed to you on a plate, including things that aren't always easy to spot via the Data Factory UI? Supported by friends in the community, I had a poke around, looking at ways a PowerShell script could do this for Data Factory and thinking about a way to objectify an entire ADF instance. Sadly, hitting an existing ADF instance deployed in Azure wasn't going to be an option:

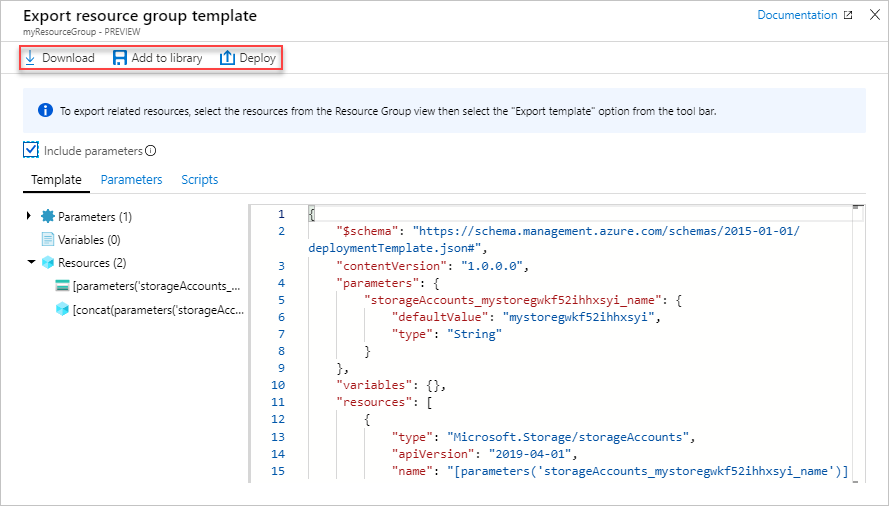

With the above frustrations in mind, my current approach is to use the ARM template that you can manually export from the Data Factory developer UI. This can be downloaded and then used locally with PowerShell.

Once the export is downloaded and unzipped, this JSON file (arm_template.json) gave me everything I needed, in a single place, to query the target Data Factory. As a starting point for this script, I've created a set of 21 logic tests/checks using PowerShell that return details about the Data Factory ARM template. These include the following:
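The checks themselves are PowerShell, but the underlying idea of objectifying a whole factory from one file can be sketched in a few lines. The following is a minimal Python sketch, not the script itself, that loads an exported arm_template.json and groups the Data Factory components by kind. It assumes the usual export layout, where each component is an entry in the top-level `resources` array with a `type` such as `Microsoft.DataFactory/factories/pipelines` and a `name` expression such as `[concat(parameters('factoryName'), '/PL_Copy')]`. The function names are hypothetical.

```python
import json
import re
from collections import defaultdict
from pathlib import Path

# Assumed name format in ADF exports:
#   "[concat(parameters('factoryName'), '/PL_Copy')]"
# The capture group picks out the component name after the "/".
_NAME_PATTERN = re.compile(r"'/([^']+)'\)\]$")


def load_arm_template(path):
    """Read an exported ARM template (e.g. arm_template.json) into a dict."""
    with Path(path).open(encoding="utf-8") as handle:
        return json.load(handle)


def component_name(resource):
    """Return the short component name, or the raw name if it doesn't match."""
    raw = resource.get("name", "")
    match = _NAME_PATTERN.search(raw)
    return match.group(1) if match else raw


def objectify(template):
    """Group component names by kind, e.g. 'pipelines', 'linkedServices'.

    The kind is the last segment of the ARM resource type,
    so "Microsoft.DataFactory/factories/pipelines" becomes "pipelines".
    """
    components = defaultdict(list)
    for resource in template.get("resources", []):
        kind = resource.get("type", "").split("/")[-1]
        components[kind].append(component_name(resource))
    return dict(components)
```

For example, `objectify(load_arm_template("arm_template.json"))` gives a per-kind list of component names that individual checks can then iterate over, without needing a live connection to the deployed factory.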

Circa 1000+ lines of PowerShell later… Each check has a severity rating (based on my experience), as in most cases there isn't anything life-threatening here. It's purely informational and based on the assumption that a complete round of integration testing has already been done for your Data Factory. The intention of the script is to improve on the basics and add quality to a development that goes beyond simple functionality. For example, a Linked Service may operate perfectly, but using Key Vault to handle its credentials is a better practice we should be working towards. Ok, enough preaching! Here is an example of the PowerShell script v0.1 output from one of my boss's Data Factory instances, certainly not one of mine!!
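To make the Key Vault example concrete, here is how one such check might look, again as a Python sketch rather than the PowerShell original. It flags Linked Services whose `typeProperties` contain no `AzureKeyVaultSecret` reference and skips the `AzureKeyVault` Linked Service itself. The `CheckResult` shape and the "Medium" severity are hypothetical. Note that a Linked Service authenticating with a managed identity holds no secret at all and would still be flagged, so, in the spirit of the script's informational output, treat each result as a prompt for review rather than a failure.

```python
from dataclasses import dataclass

LINKED_SERVICE_TYPE = "Microsoft.DataFactory/factories/linkedServices"


@dataclass
class CheckResult:
    """Hypothetical result record for a single finding."""
    check: str
    component: str
    severity: str  # hypothetical scale, e.g. "Low", "Medium", "High"
    detail: str


def uses_key_vault(node):
    """Return True if any nested dict is an AzureKeyVaultSecret reference."""
    if isinstance(node, dict):
        if node.get("type") == "AzureKeyVaultSecret":
            return True
        return any(uses_key_vault(value) for value in node.values())
    if isinstance(node, list):
        return any(uses_key_vault(item) for item in node)
    return False


def check_linked_services_use_key_vault(template):
    """Flag Linked Services with no Key Vault secret in their typeProperties."""
    results = []
    for resource in template.get("resources", []):
        if resource.get("type") != LINKED_SERVICE_TYPE:
            continue
        props = resource.get("properties", {})
        # The Key Vault Linked Service itself has no secret to look up.
        if props.get("type") == "AzureKeyVault":
            continue
        if not uses_key_vault(props.get("typeProperties", {})):
            results.append(CheckResult(
                check="Linked Service credentials in Key Vault",
                component=resource.get("name", ""),
                severity="Medium",  # hypothetical rating
                detail="No AzureKeyVaultSecret reference found in typeProperties.",
            ))
    return results
```

Each check returning a list of records like this keeps the severity alongside the finding, so the results of all checks can be concatenated and sorted into a single report.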